Benjamin Gosney and I have published some audio as a ‘digital album’ on the bandcamp website: cardboardvolcano.bandcamp.com/album/on-the-cusp-of-something. We also made a webpage and put it on the worldwide web at cardboard-volcano.co.uk

Seeing the announcement of an album by Benoît and the Mandelbrots reminded me of the 2012 HELOpg album lptp, which is available online at http://lptp.helopg.co.uk/

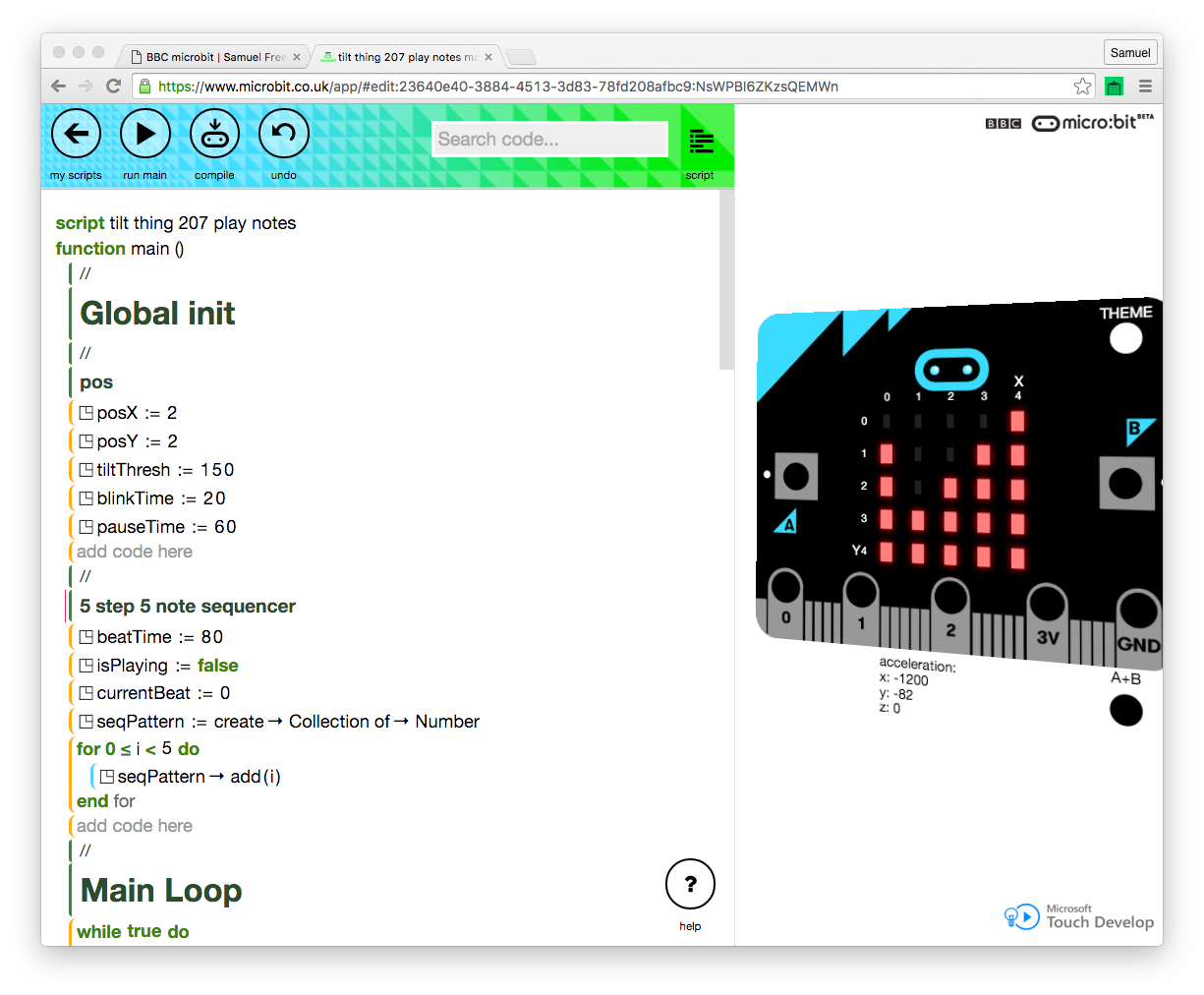

The long wait is over: after a number of delays, the BBC microbit has had its release today. Most of the following was written at the end of January 2016, and has been sat in draft form since then… so from today’s perspective it starts with a link to old news, but it is what it…

In addition to the html version of my PhD thesis — through which the portfolio of works can be accessed — there is now a copy of my thesis on the University of Huddersfield Repository: http://eprints.hud.ac.uk/23318/

In September 2013 I recorded a reading of ‘sentence 59’ for the Bizarre Bazaar project by David Mooney. The following description of the project is from the Opaque Melodies website, and below that is the finished piece on SoundCloud:

December 2014 has seen the release of Some Some Unicorn and The Golden Periphery. The album is on bandcamp, and on Spotify.

There’s a thing being called Art City going on in Stoke-on-Trent (a five-year programme that launched in September 2014). The KULES Art Residency is part of that, and their ex-factory exhibition runs November 8th-29th, 2014: more info from the supporting AirSpace Gallery. I provided art-technology and programming support for Leslie Deere’s Laserdome installation found on…

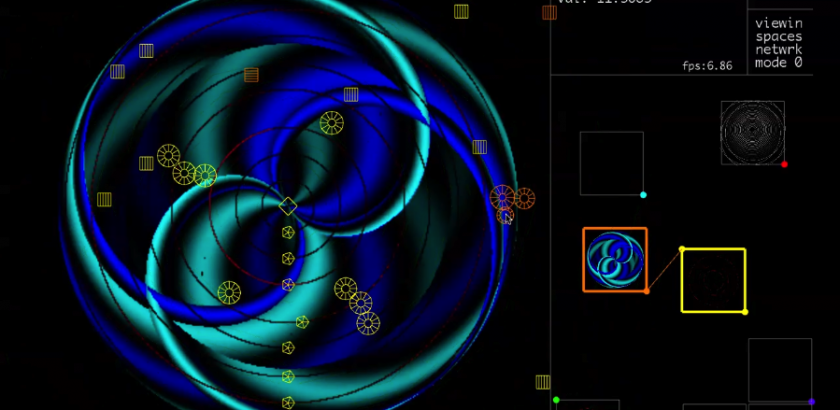

I was at the Live Coding Network Doctoral Consortium on Thursday last week. This document has notes I made afterwards. Here’s a photo of me and sdfsys, as tweeted by @pauwly: Samuel Freeman demos his doctoral sonic livecoding software – fascinating! #livecoding pic.twitter.com/4rD0Sv9QHd — Pauwly (@pauwly) September 25, 2014

Laurence Counihan clicking a heart-shaped glyph today triggered a notification to me that brought one of my video recordings from 2010 to my attention… I couldn’t remember what happens in ‘live code Max MSP 20100220’, so I watched it again. live code Max MSP 20100220 from Samuel Freeman on Vimeo. I saw that Counihan…

After some busy days, it’s time to get back to this CreativePact thing… On the 16th (two days ago) I had a quick look back at some of the ‘improvised’ videos that had been uploaded to (using this search url) and bookmarked some of them. One of those is no longer available; this one is…

A few technical hitches with rendering the video for this one… here’s a preview posted here whilst sorting that out: The video is now uploaded: Showing an improvised melodica part to an experimental work in progress titled ‘stretch’. About the work: Five stereo sound recordings (of around ten seconds to two minutes duration) have been…

This time last week I was getting ready to go to Derbyshire for the Northern Green Gathering (NGG). Whilst there I helped to install, wire, and operate a solar-powered cinema (photovoltaic panels → charge controller → 24 V DC → inverter → 240 V AC → laptop, projector, stereo amp…).

Several people, including Monty Adkins, who was the co-supervisor of my PhD, shared a link to an article on RedBullMusicAcademy.com which describes many of the inspiring goings on at CeReNeM – the Centre for Research in New Music at the University of Huddersfield. The thing that first caught my eye about this was the way…

Towards doing more interesting things with the Raspberry Pi… see notes on my Pi-specific blog: sdf-rpi.blogspot.co.uk

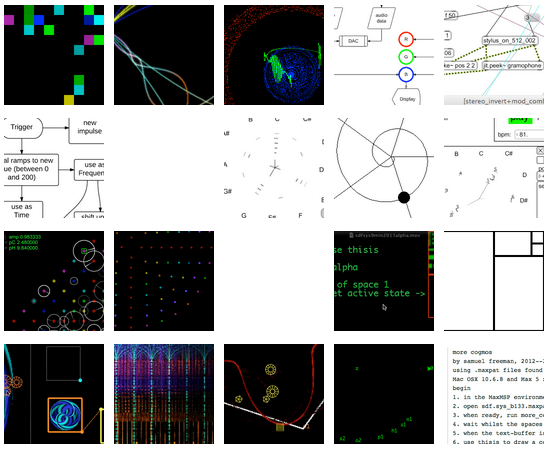

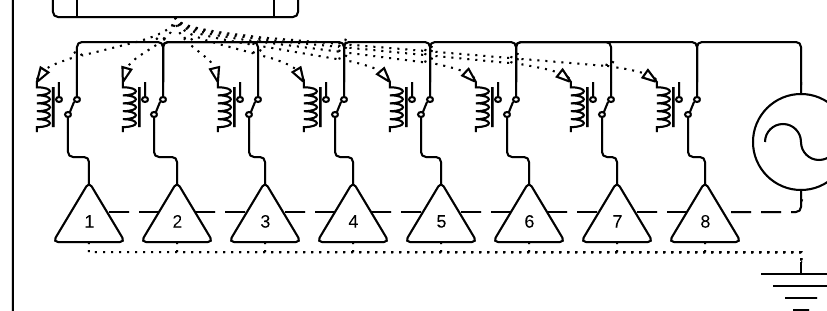

A data matrix from sdfsys exported as a png: The data was drawn using the macro syntax of thisis; six slightly different macro sequences were recorded for putting points relative to points that have been put, and another macro is defined for drawing six circles at a time with those points. The delay prefix to…